How to Analyze Qualitative Research Data: Step-by-Step Guide

Learn how to analyze qualitative research data effectively with our comprehensive, easy-to-follow guide. Improve your research skills today!

Kate, Praveen

December 6, 2023

So you've gathered your interviews, wrapped up your focus groups, and now you're staring at a mountain of raw text. What comes next? This is where the real magic of qualitative research happens: turning all that raw data (transcripts, field notes, and observations) into genuine, credible insights.

The whole point of qualitative analysis is to systematically organize and interpret all this non-numerical information. It's less about finding a single "correct" answer and more about weaving a compelling, evidence-backed story from what your participants shared.

Setting the Stage for Meaningful Analysis

Before you can uncover the story hidden in your data, you first need to understand its language. Think of qualitative analysis as an interpretive craft. You're digging into the context, motivations, and narratives that live inside your transcripts. It's a journey of discovery, not just a procedural checklist.

Getting into the right mindset is key. I always tell people to think of themselves as a detective piecing together clues, not a scientist running a sterile experiment. Your first task is to become deeply familiar with the data: the slight hesitations in speech, the phrases that keep coming up, the emotions just beneath the surface. This kind of immersion is what separates a superficial summary from a truly deep analysis.

This initial phase also demands meticulous data preparation. You can't build a solid case on shaky foundations, and clean, well-organized transcripts are those foundations. If you're working with interviews, for example, getting the transcripts right is non-negotiable. Using a reliable service for interview and focus group transcription can save you a lot of headaches and keep you from misreading something crucial later on.

Core Features That Save You Time

IA all'avanguardia

Alimentato da Whisper di OpenAI per una precisione leader nel settore. Supporto per vocabolari personalizzati, file fino a 10 ore e risultati ultra rapidi.

Importa da più fonti

Importa file audio e video da varie fonti tra cui caricamento diretto, Google Drive, Dropbox, URL, Zoom e altro.

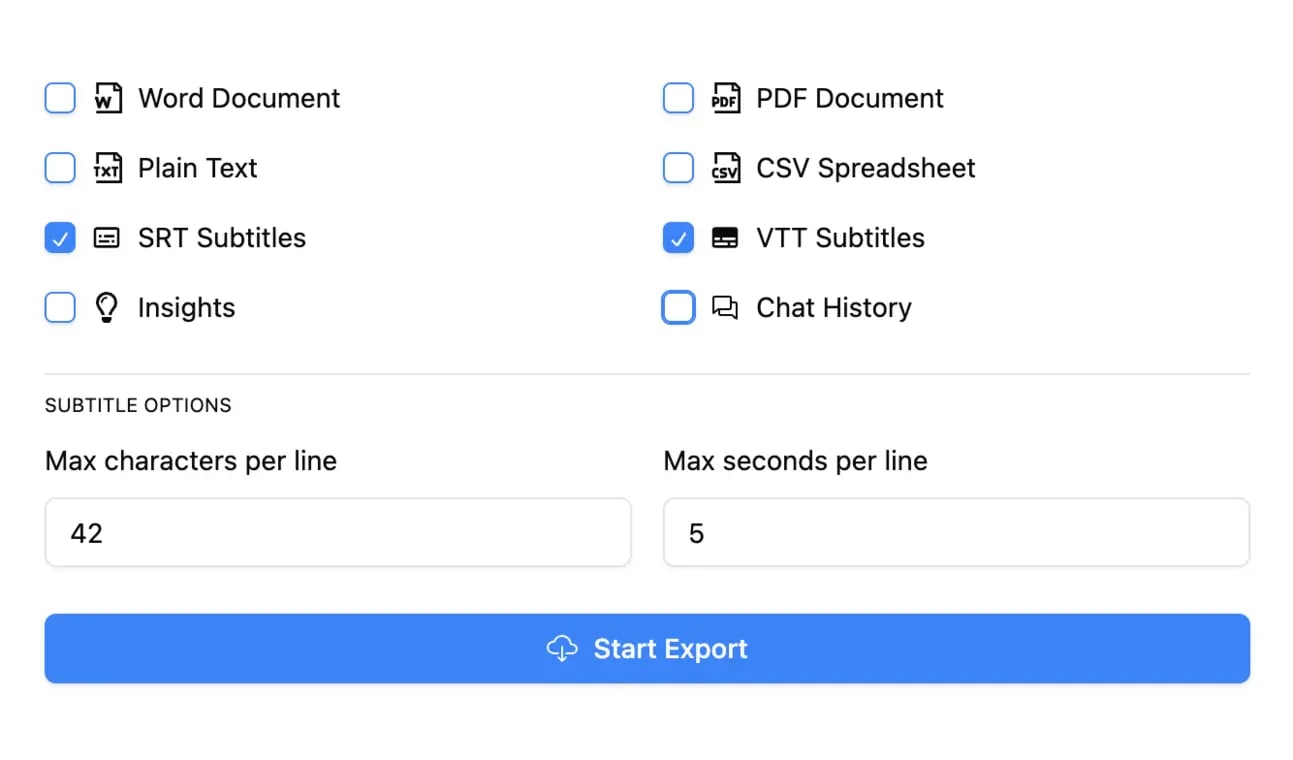

Esporta in più formati

Esporta le tue trascrizioni in più formati tra cui TXT, DOCX, PDF, SRT e VTT con opzioni di formattazione personalizzabili.

Choosing Your Method

Once your data is ready, it's time to decide on your analytical approach. The method you choose will shape how you engage with the data and, ultimately, the kind of insights you'll be able to generate.

It's important to remember that qualitative analysis isn't always a straight line. It's often cyclical and iterative: your initial analysis might actually send you back to collect more data.

As this diagram shows, analysis isn't just the final step. It's an ongoing conversation with your data that helps you refine your research questions as you go.

There are many ways to analyze qualitative data, but most researchers rely on one of five main methods: thematic analysis, content analysis, grounded theory, discourse analysis, or narrative analysis. Each serves a different purpose and suits different research goals.

Choosing Your Qualitative Analysis Method

This table offers a quick comparison to help you see which strategy makes the most sense for your project.

| Method | Primary goal | Best for |
|---|---|---|
| Thematic analysis | Identify and report patterns (themes) within the data. | Answering "What are the common ideas here?" Very flexible and great for beginners. |
| Content analysis | Quantify and count the presence of specific words or concepts. | Answering "How many times was 'support' mentioned negatively?" |
| Grounded theory | Develop a new theory "grounded" in the data itself. | Exploring a new area where little theory exists and building a model from scratch. |
| Discourse analysis | Analyze how language is used in social contexts. | Understanding how power, identity, and social norms are constructed through speech. |
| Narrative analysis | Understand how people construct stories and make sense of their lives. | Examining individual experiences through the lens of a complete story (plot, characters, etc.). |

Taking a moment to choose the right method up front makes the whole process more structured and manageable, and it ensures your analysis directly addresses your research objectives.

Key Insights for Researchers

Remember, qualitative analysis is iterative. Your early findings might reshape your research questions.

The goal isn't just to summarize but to interpret. Your analysis should answer the "So what?" question, explaining why your findings matter and what they mean in a broader context.

Ultimately, choosing the right path from the start helps you turn a complex pile of text into a clear, focused, and insightful story.

Preparing Your Data for Analysis

Great analysis doesn't happen by accident. It starts well before you even think about applying your first code. The real work begins with meticulously preparing your raw materials: interview transcripts, field notes, and open-ended survey responses.

Think of it like a chef doing their mise en place. Everything has to be prepped and organized before the real cooking begins. Getting this phase right is non-negotiable if you want insights you can genuinely trust.

At the heart of this process is accurate transcription. It sounds like an administrative chore, but your transcript is your primary dataset. A single misheard word or a missing phrase can completely distort a participant's story and send your entire analysis down the wrong path.

Transcribing audio by hand is slow, painful work, as anyone who has done it knows. If you're facing hours of interviews, do yourself a favor and find a reliable tool to transcribe your audio to text for free. It can save you dozens of hours and give you a much more accurate starting point. But remember, a good transcript is more than just the words.

It's More Than Just Words on a Page

A basic transcript gives you the "what," but a truly rich one captures the "how." Much of human communication happens in the silent spaces between words. To get the full picture, your transcripts need to include more than just the dialogue.

I always make sure to add annotations for things like:

- Significant pauses: A long silence can mean anything from deep reflection to serious discomfort. It's a clue.

- Emotional tone: Note laughter, sighs, or any shift in vocal energy. I often use simple tags like [voice cracking] or [speaking faster].

- Nonverbal cues: If you have video or were in the room, note important body language. A shrug, a nod, leaning back: all of this is part of the data.

These small details add critical layers of meaning that a plain text file misses entirely. A participant saying "I'm fine" means two very different things depending on whether it's followed by a laugh or a heavy sigh.

My personal rule is this: if it felt important in the moment, it belongs in the transcript. Don't second-guess your intuition during the interview; that gut feeling is often your first analytical spark.
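If you annotate this way, it helps to keep your notes separable from the spoken words so you can later search for, say, every sigh. The sketch below assumes each annotation is written inline in square brackets, like the [voice cracking] tag above; that convention, the function name `split_annotations`, and the sample line are illustrative choices, not a transcription standard.

```python
import re

# Assumption: every annotation is written inline in square brackets,
# e.g. "[long pause]" or "[sighs]".
ANNOTATION = re.compile(r"\[([^\[\]]+)\]")


def split_annotations(line):
    """Return (spoken text with annotations removed, list of annotations)."""
    tags = ANNOTATION.findall(line)
    spoken = ANNOTATION.sub("", line)
    # Collapse the extra spaces left behind where tags were removed.
    spoken = " ".join(spoken.split())
    return spoken, tags


line = "P07: I'm fine. [long pause] [sighs] Really, it's fine."
spoken, tags = split_annotations(line)
print(spoken)  # P07: I'm fine. Really, it's fine.
print(tags)    # ['long pause', 'sighs']
```

Keeping both parts lets you read the clean dialogue during coding while still querying the nonverbal layer when a passage needs context.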

From Chaos to Clarity

Once your transcripts are rich and ready, the next beast to tackle is organization. It's easy to feel buried when you're looking at potentially hundreds of pages of text. The goal here is to build a system that makes your data accessible, not overwhelming.

A simple but remarkably effective strategy is to create a master document or spreadsheet. Think of it as an inventory of all your data sources. I include columns for a participant ID, the date, the data type (interview, field note, etc.), and a short summary. This alone will keep you from losing track of a crucial file.
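If you'd rather keep that inventory in code than in a spreadsheet, a minimal Python sketch might look like this. The fields mirror the columns listed above; the class name `DataSource`, the two helper functions, and the sample rows are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass
class DataSource:
    """One row of the master inventory (field names are illustrative)."""
    participant_id: str
    collected_on: date
    data_type: str  # e.g. "interview", "field note", "survey"
    summary: str


# Hypothetical example rows.
inventory = [
    DataSource("P01", date(2023, 10, 2), "interview", "Team lead, fully remote"),
    DataSource("P02", date(2023, 10, 4), "interview", "New hire, hybrid schedule"),
    DataSource("P01", date(2023, 10, 9), "field note", "Observed a stand-up meeting"),
]


def count_by_type(sources):
    """How many sources of each data type you have collected."""
    return Counter(s.data_type for s in sources)


def for_participant(sources, pid):
    """Every source linked to one participant, oldest first."""
    return sorted((s for s in sources if s.participant_id == pid),
                  key=lambda s: s.collected_on)


print(count_by_type(inventory))
for s in for_participant(inventory, "P01"):
    print(s.collected_on, s.data_type, "-", s.summary)
```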

The last, and perhaps most important, preparation step is what I call data immersion. That means reading. And then rereading. Read everything without the pressure to start analyzing, and let the stories, phrases, and recurring ideas wash over you. It's this deep familiarity that allows patterns to emerge when you finally start coding.

From Raw Text to Actionable Codes

Once you've spent time immersed in the data, it's time to start making sense of the chaos. This is where coding comes in: the process of breaking all that raw text down into small, labeled pieces of meaning. Think of it as creating a detailed index for your data; each code is a tag that captures a single idea, concept, or emotion.

Coding is really where analysis begins. It's the foundational step that takes you from a dense, intimidating transcript to a structured set of initial ideas. It isn't just summarizing; it's systematically deconstructing the text to see its building blocks.

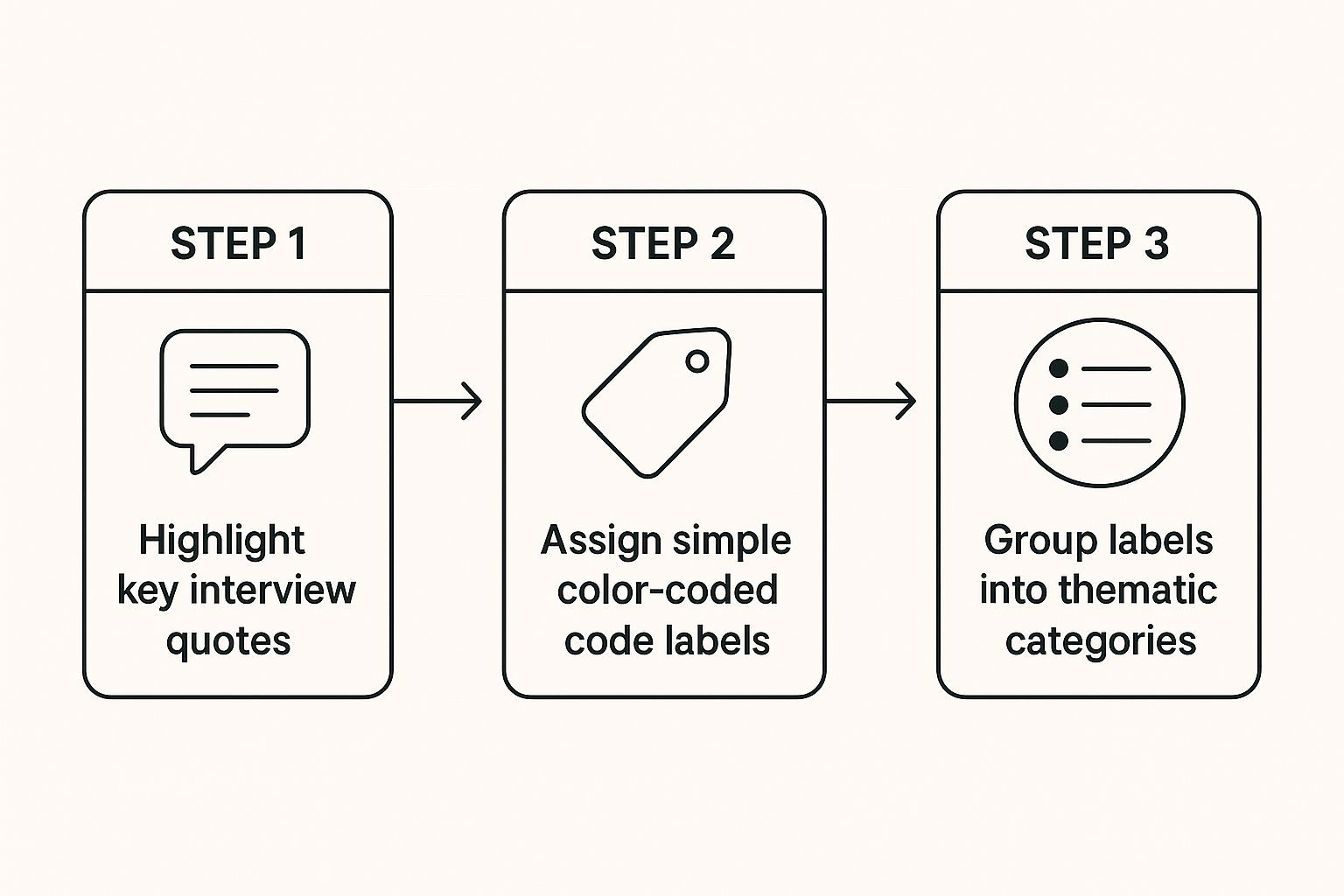

This visual flow shows how you can move from highlighted text to initial codes, and then group those codes into broader categories.

The infographic gets to the heart of coding: spotting meaningful statements and assigning labels that prepare them for thematic grouping.

Starting With a Clean Slate

One of the most common ways to begin is with open coding, where you start without preconceptions. You read the data line by line and create codes based on what jumps out directly from the text. It's an inductive, bottom-up process, well suited to exploratory research when you don't yet know which patterns you'll find.

For example, if you're analyzing interview transcripts about workplace satisfaction, you might come up with codes like:

- "Feeling valued by management"

- "Frustration with outdated tools"

- "Positive team collaboration"

The trick is to stay close to the data, using the participants' own words or simple descriptive phrases.
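To make the "detailed index" idea concrete, here is a small sketch that stores each excerpt with its open codes and then inverts that into a code-to-excerpts index. The codes are the workplace-satisfaction examples above; the participant IDs and quotes are invented placeholders.

```python
from collections import defaultdict

# Each excerpt: (participant ID, quote, codes assigned during open coding).
# The codes come from the workplace example; IDs and quotes are invented.
excerpts = [
    ("P01", "My manager actually thanks us after every release.",
     ["Feeling valued by management"]),
    ("P02", "We're still stuck on a ticketing system from years ago.",
     ["Frustration with outdated tools"]),
    ("P03", "Someone always jumps in when you're stuck.",
     ["Positive team collaboration"]),
    ("P04", "When the old build server dies, the whole team rallies around it.",
     ["Frustration with outdated tools", "Positive team collaboration"]),
]


def build_index(rows):
    """Invert excerpt -> codes into code -> list of (participant, quote)."""
    index = defaultdict(list)
    for participant, quote, codes in rows:
        for code in codes:
            index[code].append((participant, quote))
    return dict(index)


index = build_index(excerpts)
for code, hits in sorted(index.items()):
    print(f"{code}: {len(hits)} excerpt(s)")
```

Note that one excerpt can carry several codes; the P04 quote shows up under both of its labels in the index.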

Using a Predefined Framework

Alternatively, you might use deductive coding. This approach is very useful when you already have a theory or framework you want to test. You start with a list of predetermined codes and then look for evidence of them in your data.

Imagine analyzing customer feedback using a well-known customer service model. Your initial codes might include "Responsiveness," "Reliability," and "Empathy." This method is much more structured and efficient for confirming or challenging ideas you already hold. For a deeper look at applying these techniques, our guide on how to analyze interview data provides more specific examples.
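Some researchers speed up the first pass of deductive coding with a keyword pre-screen that flags passages worth reading closely. The sketch below is that kind of crude filter, not deductive coding itself: it assumes each predefined code (the customer-service codes above) gets a hand-picked list of single-word cues, and those cue lists are made up for illustration. Every hit still needs human judgment, and many relevant passages will contain none of the cues.

```python
import re

# Hypothetical cue words per predefined code (single words only, because
# matching is done against the set of words in the text).
PREDEFINED_CODES = {
    "Responsiveness": ["quick", "fast", "waited", "reply"],
    "Reliability": ["always", "consistent", "broke", "again"],
    "Empathy": ["listened", "understood", "cared"],
}


def flag_candidates(text, codebook):
    """Return predefined codes whose cue words appear in the text, in codebook order."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return [code for code, cues in codebook.items() if words & set(cues)]


feedback = "I waited three days for a reply, but the agent really listened."
print(flag_candidates(feedback, PREDEFINED_CODES))  # ['Responsiveness', 'Empathy']
```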

Whichever approach you choose, the goal is the same: create a set of consistent, meaningful labels you can apply across your entire dataset. This process is rarely linear; you'll likely revise, merge, and split codes as your understanding deepens.
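Because codes get merged and split so often, it's worth recording each change as you make it. Here is a sketch of a merge operation that relabels excerpts and appends an audit entry explaining why; the data layout (excerpt ID mapped to a set of codes), the function name `merge_codes`, and the example codes are assumptions for illustration.

```python
from datetime import datetime, timezone


def merge_codes(assignments, old_codes, new_code, log, reason):
    """Relabel every excerpt tagged with any of `old_codes` as `new_code`.

    assignments: dict of excerpt ID -> set of codes (updated in place).
    log: list that receives one audit entry describing the merge.
    Returns the IDs of the excerpts that changed.
    """
    old = set(old_codes)
    touched = []
    for excerpt_id, codes in assignments.items():
        if codes & old:
            codes -= old  # in-place set difference
            codes.add(new_code)
            touched.append(excerpt_id)
    log.append({
        "when": datetime.now(timezone.utc).isoformat(),
        "action": "merge",
        "from": sorted(old),
        "to": new_code,
        "excerpts": touched,
        "reason": reason,
    })
    return touched


# Hypothetical excerpt IDs and codes.
assignments = {
    "P01-03": {"Slow laptops"},
    "P02-07": {"Old ticketing system", "Team support"},
    "P03-01": {"Team support"},
}
audit_log = []
changed = merge_codes(
    assignments,
    ["Slow laptops", "Old ticketing system"],
    "Frustration with outdated tools",
    audit_log,
    reason="Both describe friction caused by legacy tooling",
)
print(changed)
```

The log doubles as a record of your analytical decisions, which is useful when you later need to explain how a code came to mean what it does.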

Keeping Your Analysis Consistent

As you start developing your codes, it's essential to create a codebook. This is a central document that defines each code and provides clear rules for when to apply it. A solid codebook includes:

- Code name: A short, descriptive label (e.g., "Resource constraints").

- Full definition: A detailed explanation of what the code means in the context of your study.

- Inclusion/exclusion criteria: Specific rules about what should and shouldn't be assigned to this code.

- Example quote: A clear example from your data that illustrates the code in action.

This document becomes your analytical North Star. It ensures that you (and anyone else on your team) apply codes consistently, which makes your findings far more reliable and defensible. It also forces you to think critically about your labels and prevents "coder drift," where a code's meaning slowly shifts over time.
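The four parts of a codebook entry map naturally onto a small record type. A sketch, assuming one Python dataclass per code; the definition wording is placeholder text, and `undefined_codes` is a hypothetical helper that flags codes applied during coding that never made it into the codebook, one early sign of coder drift.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodeDefinition:
    """One codebook entry, following the four parts listed above."""
    name: str
    definition: str
    include_when: str
    exclude_when: str
    example_quote: str


# Placeholder wording; replace with definitions and quotes from your own study.
codebook = {
    entry.name: entry
    for entry in [
        CodeDefinition(
            name="Resource constraints",
            definition="Participant describes lacking time, budget, staff, or tools.",
            include_when="The shortage is described as affecting their work.",
            exclude_when="General complaints with no specific shortage named.",
            example_quote="<paste a representative excerpt here>",
        ),
    ]
}


def undefined_codes(applied_codes, book):
    """Codes used during coding that have no codebook entry yet."""
    return set(applied_codes) - set(book)


print(undefined_codes(["Resource constraints", "Burnout"], codebook))
```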


Uncovering the Core Themes in Your Data

You've done the hard work of coding your data, essentially creating a detailed index of every idea in your transcripts. Now it's time to step back. This is where you move from simple labeling to real interpretation, connecting the dots between your codes to find the broad, overarching themes.

This isn't about neatly sorting your codes into piles. Real thematic analysis is where you uncover the relationships and patterns that tell a compelling story. It's the moment your raw data starts to reveal powerful, strategic insights.

From Codes to Concepts

The first real step in finding themes is to start grouping related codes. Lay them all out where you can see them: I'm a fan of sticky notes on a wall, but a digital whiteboard or mind map works just as well. Then look for codes that seem connected or that point to the same underlying idea.

For example, imagine you're analyzing interviews about remote work. You might have codes like "Zoom fatigue," "missing informal chats," and "difficulty collaborating on complex tasks."

Each is a specific observation. But when you look at them together, they begin to form a broader conceptual bucket. You might initially call this group something like "Virtual Collaboration Challenges."

Honestly, this process is always a bit messy, and you'll revisit it a few times. You'll move codes around, create new clusters, and rename your groups as your understanding of the data improves. The goal isn't to get it perfect on the first try, but to start seeing how all those individual data points connect to bigger ideas.
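If you sort codes digitally rather than on sticky notes, the grouping is simply a mapping from each code to a candidate theme, which you keep editing as clusters change. A sketch using the remote-work codes above; the fourth code, "Flexible hours," and its theme, "Autonomy Over Time," are invented to show more than one group.

```python
from collections import defaultdict

# Working mapping from code to candidate theme; expect to edit it often.
# The first three codes are the remote-work examples; the fourth code and
# the "Autonomy Over Time" theme are hypothetical.
code_to_theme = {
    "Zoom fatigue": "Virtual Collaboration Challenges",
    "Missing informal chats": "Virtual Collaboration Challenges",
    "Difficulty collaborating on complex tasks": "Virtual Collaboration Challenges",
    "Flexible hours": "Autonomy Over Time",
}


def group_by_theme(mapping):
    """Invert code -> theme into theme -> list of codes."""
    themes = defaultdict(list)
    for code, theme in mapping.items():
        themes[theme].append(code)
    return dict(themes)


def unassigned(all_codes, mapping):
    """Codes not yet placed in any theme; revisit these on the next pass."""
    return sorted(set(all_codes) - set(mapping))


for theme, codes in group_by_theme(code_to_theme).items():
    print(theme, "->", codes)
```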

Visualizing the Connections

Sometimes, staring at a list of codes can feel pretty uninspiring. This is where visual techniques can be a real game changer, especially if you're looking for a more intuitive way to analyze qualitative research data.

Two of my favorite methods are affinity diagramming and mind mapping.

- Affinity Diagram: This is the classic sticky-note method. Write each code on a separate note and physically move them around a wall or whiteboard. Start grouping them by instinct without overthinking. This hands-on approach often reveals connections you would completely miss in a spreadsheet.

- Mind Mapping: Start with a central research question or an important code in the middle of a page, then branch out with related codes and ideas. This is great for visualizing hierarchical relationships, helping you see which ideas are central and which are supporting details.

These visual methods help you break out of a linear, text-based mindset and think more spatially and creatively.

Your themes need to do more than summarize the data; they need to interpret it. A strong theme has a narrative. It makes an argument or offers a point of view about your data, answering the all-important "So what?" question.

Putting Your Themes to the Test

Once you have a set of potential themes, you need to be tough on them. A theme is only as strong as the evidence supporting it. For each one, ask yourself a few critical questions:

- Is it distinct? Does this theme capture a unique idea, or does it overlap too much with another? If two themes are too close, you may need to merge them or sharpen your definitions.

- Is it well supported? Can you pull several convincing quotes or data excerpts that clearly bring this theme to life? If you're struggling to find good evidence, it probably isn't a real theme.

- Does it answer the research question? Every theme should directly help you address your core research objectives. If it doesn't, it might be an interesting side note, but it isn't a central finding.

By systematically challenging your emerging themes, you ensure that your final analysis isn't just a collection of random ideas but a coherent, defensible story firmly rooted in your data. That's what robust qualitative analysis is all about.
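For the "Is it well supported?" question, a quick tally can reveal themes that rest on a single talkative participant. A sketch, assuming you have (participant, code) pairs and a mapping from codes to themes; the data, function names, and the threshold of three participants are all hypothetical. A low count only flags a theme for a closer look; it doesn't disqualify it.

```python
from collections import defaultdict

# Hypothetical inputs: (participant, code) pairs and a code -> theme mapping.
code_to_theme = {
    "Zoom fatigue": "Virtual Collaboration Challenges",
    "Missing informal chats": "Virtual Collaboration Challenges",
    "Flexible hours": "Autonomy Over Time",
}
coded = [
    ("P01", "Zoom fatigue"),
    ("P02", "Missing informal chats"),
    ("P03", "Zoom fatigue"),
    ("P01", "Flexible hours"),
    ("P01", "Flexible hours"),
]


def evidence_summary(pairs, mapping):
    """Theme -> (number of supporting excerpts, number of distinct participants)."""
    excerpt_counts = defaultdict(int)
    participants = defaultdict(set)
    for participant, code in pairs:
        theme = mapping.get(code)
        if theme is None:
            continue  # code not assigned to a theme yet
        excerpt_counts[theme] += 1
        participants[theme].add(participant)
    return {t: (excerpt_counts[t], len(participants[t])) for t in excerpt_counts}


def weak_themes(summary, min_participants=3):
    """Themes voiced by fewer participants than an (arbitrary) threshold."""
    return sorted(t for t, (_, n) in summary.items() if n < min_participants)


summary = evidence_summary(coded, code_to_theme)
print(summary)
print(weak_themes(summary))
```

In this toy data, "Autonomy Over Time" has two excerpts but both come from P01, which is exactly the kind of imbalance worth checking before calling it a theme.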

Using Modern Tools to Streamline Your Analysis

The classic image of a qualitative researcher is someone surrounded by highlighters and a wall plastered with sticky notes. While that method still has its place, the right software can be a complete game changer.

Moving from manual analysis to modern tools isn't just about saving time. It opens up entirely new ways of seeing your data, especially when you're dealing with a large or particularly complex dataset.

Specialized qualitative data analysis software (QDAS) essentially acts as a command center for your research. Think of platforms like NVivo or MAXQDA as digital workbenches. They're built to help you manage hundreds of pages of transcripts, organize thousands of individual codes, and visualize the intricate relationships between them. This is where you move from simple sorting to genuine, complex pattern recognition.

Choosing Your Toolkit

You don't always need to bring out the big guns. For smaller projects, say a dozen or so interviews, a well-organized spreadsheet in Google Sheets or Excel can be surprisingly effective. You can easily set up columns for quotes, codes, and memos to keep your initial analysis clean and straightforward.
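For the spreadsheet route, a plain CSV with one row per coded quote opens in both Google Sheets and Excel. A sketch using Python's standard `csv` module; the `participant_id` column and the sample row are assumptions, and the data is written to an in-memory string only to keep the example self-contained (in practice you would write to a file opened with `open("coding.csv", "w", newline="")`).

```python
import csv
import io

# Columns follow the quotes/codes/memos layout above; "participant_id" is an
# extra column added for this sketch.
FIELDS = ["participant_id", "quote", "code", "memo"]

# Hypothetical example row.
rows = [
    {"participant_id": "P01",
     "quote": "I dread the late-afternoon video call.",
     "code": "Zoom fatigue",
     "memo": "Timing matters? Ask P02 about afternoons."},
]


def to_csv(records, fieldnames=FIELDS):
    """Serialize coding rows to CSV text that Sheets or Excel can import."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def from_csv(text):
    """Read CSV text back into a list of dicts, one per coded quote."""
    return list(csv.DictReader(io.StringIO(text)))


text = to_csv(rows)
print(text)
```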

But as your dataset grows, the value of dedicated software becomes impossible to ignore.

- QDAS (NVivo, MAXQDA): These tools are designed to handle huge volumes of text, audio, and even video data. Their real power lies in linking codes to themes and then linking those themes to participant demographics. This lets you ask complex questions like: "How did managers and junior staff talk about work-life balance?"

- AI-powered platforms: A new wave of tools now incorporates AI to help with tasks like automatic transcription and even suggesting initial codes. They can often spot high-level patterns or sentiment trends you might miss in a first manual pass.
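The manager-versus-junior question above boils down to joining coded excerpts with participant attributes. The sketch below shows that underlying idea in plain Python; it is not how NVivo or MAXQDA work internally, and the roles, codes, quotes, and the function name `excerpts_by_attribute` are hypothetical.

```python
# Hypothetical participant attributes and coded excerpts.
roles = {"P01": "manager", "P02": "junior", "P03": "junior"}
coded = [
    ("P01", "Work-life balance", "I answer email at night so my team doesn't have to."),
    ("P02", "Work-life balance", "Nobody expects replies after six."),
    ("P03", "Zoom fatigue", "Back-to-back calls all afternoon."),
    ("P03", "Work-life balance", "I log off at five, no guilt."),
]


def excerpts_by_attribute(code, rows, attributes):
    """Group the excerpts for one code by a participant attribute such as role."""
    grouped = {}
    for pid, row_code, quote in rows:
        if row_code == code:
            grouped.setdefault(attributes[pid], []).append(quote)
    return grouped


result = excerpts_by_attribute("Work-life balance", coded, roles)
for role, quotes in sorted(result.items()):
    print(f"{role}: {len(quotes)} excerpt(s)")
    for q in quotes:
        print("   ", q)
```

Returning the quotes themselves, rather than just counts, keeps the comparison interpretive: you read what each group actually said.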

This screenshot of the MAXQDA interface shows how a researcher can view a transcript, apply color-coded tags, and see the entire code system in a single window.

The real magic is in the integration. Your data, your codes, and your analytical notes all live in the same place. This creates a clear, defensible audit trail that shows exactly how you got from raw data to your final interpretation.

The best tool doesn't replace your critical thinking; it supports it. Software helps manage complexity so you can focus on the uniquely human work of interpretation and meaning-making.

Integrating AI and Quantitative Elements

The role of technology in qualitative analysis keeps evolving, and more and more researchers are combining approaches that used to be completely separate.

One of the most interesting developments is the use of statistical tools to manage and interpret findings. Although qualitative research is inherently non-numerical, descriptive statistics can help you spot patterns in qualitative data that has quantitative elements. For example, you might count how often certain codes appear across different participant groups.
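A sketch of that kind of count, assuming coded data as (participant, code) pairs and a hypothetical participant-to-group mapping. It counts distinct participants per code rather than raw mentions, so one person repeating a point doesn't inflate the number. With samples this small, the figures are descriptive only, not evidence of a statistically meaningful difference.

```python
from collections import Counter, defaultdict

# Hypothetical data: participant -> group, and (participant, code) pairs.
groups = {"P01": "manager", "P02": "manager",
          "P03": "junior", "P04": "junior", "P05": "junior"}
coded = [
    ("P01", "Zoom fatigue"), ("P01", "Zoom fatigue"), ("P02", "Flexible hours"),
    ("P03", "Zoom fatigue"), ("P04", "Zoom fatigue"), ("P05", "Flexible hours"),
]


def code_frequencies(pairs, group_of):
    """Group -> Counter of code -> number of distinct participants mentioning it."""
    seen = defaultdict(set)  # (group, code) -> participant IDs
    for pid, code in pairs:
        seen[(group_of[pid], code)].add(pid)
    table = defaultdict(Counter)
    for (group, code), pids in seen.items():
        table[group][code] = len(pids)
    return table


table = code_frequencies(coded, groups)
group_sizes = Counter(groups.values())
for group, counts in sorted(table.items()):
    for code, n in sorted(counts.items()):
        print(f"{group:<8} {code:<15} {n}/{group_sizes[group]} participants")
```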

And while our focus here is on qualitative methods, looking at adjacent fields such as data analysis in higher education can offer fresh ideas on handling large datasets with modern tools.

Ultimately, whether you use a simple spreadsheet or advanced AI software, it all starts with an accurate transcript. That's the foundation. To get it right, check out our review of the best meeting transcription software to find a tool that fits your workflow.


Frequently Asked Questions About Qualitative Analysis

Diving into qualitative analysis always raises a few thorny questions, even for people who have practiced it for years. It's completely normal to grapple with issues like bias, sample size, and which method is really the right one. Let's tackle some of the most common.

One of the biggest hurdles is our own subjectivity. As the researcher, you are the analytical instrument, which means your personal perspective inevitably colors how you see the data. Trying to be a completely neutral observer is a losing battle. The real goal isn't a false sense of objectivity but transparency.

I make a habit of keeping a "researcher journal" for every project. In it, I note my initial assumptions before I even start, my gut reactions during an interview, and ideas that come to me along the way. This simple practice helps me see my biases so I can consciously set them aside and focus on what the data is actually saying. It's all about self-awareness.

Determining the Right Sample Size

Another question I hear all the time is: "So, how many interviews do I actually need?" Unlike in quantitative research, there's no magic number. The answer lies in a concept called saturation.

You've reached saturation when you keep collecting data but no longer hear anything new. The stories start to sound familiar, the same themes come up again and again, and your analytical categories feel solid. For a tightly focused study, this might happen after just 12 to 15 interviews. For a broader, more complex topic, you may need more.
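Saturation is ultimately a judgment call, but some researchers track it by noting how many new codes each interview adds. The sketch below is one crude version of that idea: it reports the interview that completes the first run of consecutive interviews introducing no new codes. The run length of 3, the function names, and the sample codes are assumptions, and the check ignores how rich each interview was.

```python
def new_codes_per_interview(interviews):
    """For each interview (in order), the codes not seen in any earlier one."""
    seen = set()
    novel = []
    for codes in interviews:
        fresh = set(codes) - seen
        novel.append(fresh)
        seen |= fresh
    return novel


def saturation_point(interviews, run_length=3):
    """1-based index of the interview that completes the first run of
    `run_length` consecutive interviews adding no new codes, or None."""
    streak = 0
    for i, fresh in enumerate(new_codes_per_interview(interviews), start=1):
        streak = streak + 1 if not fresh else 0
        if streak == run_length:
            return i
    return None


# Hypothetical codes found in nine interviews, in the order they were analyzed.
interviews = [
    {"A", "B"}, {"B", "C"}, {"C", "D"}, {"A", "D"}, {"B"},
    {"E"}, {"A", "C"}, {"D"}, {"B", "E"},
]
print(saturation_point(interviews))
```

Here interview 6 introduces code "E" and resets the streak, so the three-interview run without new codes only completes at interview 9.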

Don't fixate on a target number before you start. It all depends on the richness of the data. One truly illuminating, in-depth interview can be worth more than three superficial ones. The quality of your participants and your skill as an interviewer matter far more than the final count.

Combining Different Analysis Methods

Finally, people often ask whether it's okay to mix and match analysis methods. The answer is a big yes, as long as there's a good reason. This approach, known as methodological triangulation, can seriously strengthen your findings.

For example, you might use thematic analysis to get a big-picture view of patterns across all your interviews, then zoom in on a few specific transcripts with narrative analysis to dig into individual stories.

Here's how you might approach it:

- Start Broad: Begin with a method like thematic analysis to map the overall landscape of your entire dataset.

- Go Deep: Next, pick a few of the most compelling interviews and apply a more targeted method, such as discourse analysis, to examine the subtle details of language.

- Integrate the Findings: Finally, explain how the insights from each method support or even challenge one another, which gives you a much more robust, layered interpretation.

Combining methods isn't about trying everything to see what works. It's a strategic move that lets you look at your data from multiple angles, leading to far more credible and illuminating conclusions.


Ready to turn your interviews and focus groups into clear, actionable text? Transcript.LOL provides lightning-fast, highly accurate transcriptions so you can focus on analysis, not administration. Start transcribing for free and streamline your research workflow today.